Errors in data products

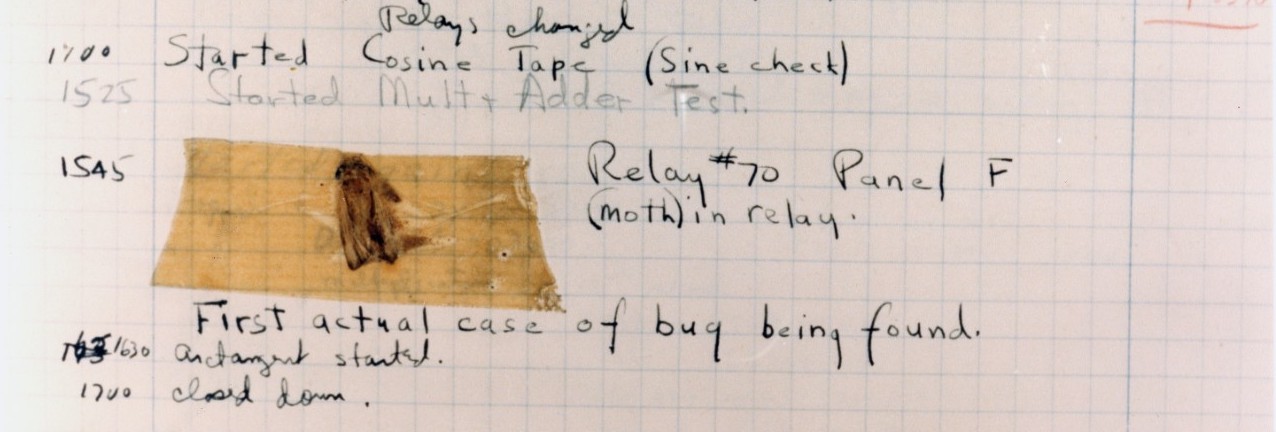

The First “Computer Bug,” a moth “found trapped between points at Relay #70, Panel F, of the Mark II Aiken Relay Calculator while it was being tested at Harvard University, 9 September 1945.” Photo credit: Naval History and Heritage Command NH 96566-KN

The First “Computer Bug,” a moth “found trapped between points at Relay #70, Panel F, of the Mark II Aiken Relay Calculator while it was being tested at Harvard University, 9 September 1945.” Photo credit: Naval History and Heritage Command NH 96566-KN

All non-trivial software has bugs. It is possible to write provably correct software, for some definition of that, but it’s very expensive and almost nobody does it. Instead, much software development today is based around philosophies like “minimum viable product” and “move fast and break things.”

Errors, the thinking goes, can be fixed in some later update. In the meantime, if your program crashes or a feature doesn’t work, users will just restart or reload or work around it.1

Sometimes that approach works, and sometimes not. And the kinds of errors that we find in data products makes this especially fraught. My taxonomy of software errors is this:2

- Compile time errors cause an obvious visible failure during the build phase of a project, e.g. a compiler fails with an error.

- Run time errors cause an obvious visible failure once the project is in use (ideally in testing, but quite possibly in production), e.g. a program crashes or a website returns “500 internal server error.” These are unambiguous error states, obvious to any user.

- Logic errors do not cause any obviously abnormal or out-of-band behavior, but cause incorrect output. You can further subdivide these into visible logic errors—those that a user might detect as out-of-expectations—and invisible logic errors, which look plausible but are still wrong.3

Data scientists usually build and deploy our deliverables—models, data sets, even decisions—as software. So data science deliverables have bugs. How then, does this taxonomy apply?

Compile time errors are great. They’re found quickly and with basic processes they should never propagate beyond a development environment or even a single developer’s machine. Unfortunately these are less relevant to data science, because we don’t use a lot of compiled languages.

Run time errors are more common in data science, and they’re worse because they can escape to production. In languages like Python and R even syntax errors can manifest as run time errors, not discovered until a particular branch is taken for the first time. That’s bad: it means your carefully trained model will fail in production. But it’s often not a disaster, because your model is just one component of a somewhat fault-tolerant human-computer system. Worst case, someone will recognize the error, pull the plug and switch to manual processes.

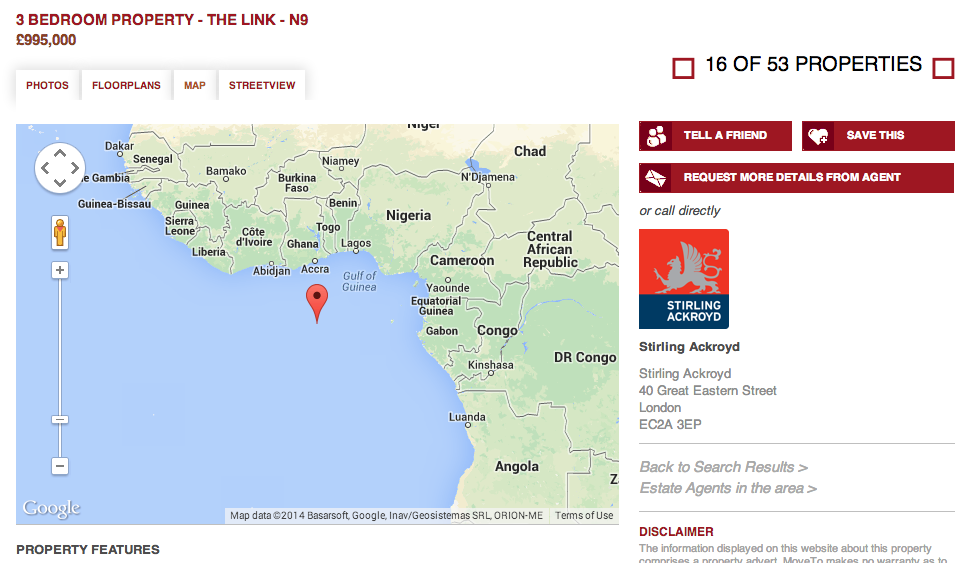

Visible logic errors are worse again: they might be caught by humans but can easily slip past automated systems. An automated system might check, say, that longitude is in the range -180 to +180 and latitude in the range -90 to +90, but it won’t necessarily recognize that (0, 0) is a pretty implausible location.4

When your latitude’s nil and your longitude’s nought, that’s Null Island: valid but incorrect data, but at least it’s obviously incorrect. Screenshot credit: Mostly Maps

When your latitude’s nil and your longitude’s nought, that’s Null Island: valid but incorrect data, but at least it’s obviously incorrect. Screenshot credit: Mostly Maps

It’s the invisible logic errors that are the real killer in data science. Maybe you swapped two columns in an input file. Or you forgot to handle NAs. Or you didn’t check whether some algorithm converges, and this time it didn’t—but it output a number anyway. Invisible logic errors are indidious. It might be days, months or years before such an error discovered—if ever. Meanwhile, some decision maker has been making incorrect decisions based on it.

The only saving grace of these errors is that since they weren’t obviously wrong, and people are natural Bayesians, they probably aren’t that wrong. But a bit wrong can still be very costly. The Reinhart-Rogoff spreadsheet incident is a now-classic example of such an error, one that quite possibly altered decisions affecting tens of milions of people during the depths of the financial crisis.

Minimizing and detecting such errors is critical to doing data science well. “Move fast and break things” doesn’t work very well in data science, because it’s so easy to break things without realizing anything is broken.

(Incidentally, people just getting into data science tend to worry about these errors in exactly the wrong order. They inevitably spend a lot of time resolving compile and run-time errors, so that once a model runs and produces results, they’re far too quick to accept those results as correct. But getting it to run is the start line, not the finish line.)

-

There are, of course, pockets of more rigorous software development, in embedded systems space or aviation or healthcare or automobiles, where bugs can be deadly; or in finance, where they can be incredibly expensive. But most developers don’t work in such a setting (and those who do probably aren’t blogging about it). ↩

-

Others may use these terms a little differently. Another person’s run time errors, in particular, might encompass some of what I call logic errors. But I find this particular distinction useful.

Secondly, these categories depend on the tools and systems being used: according to my taxonomy, the Mars Climate Orbiter failed in 1999 due to a logic error that could easily have been a compile time error under a different type system.

Thirdly, obviously, this is a narrow perspective on software errors, encompassing only coding errors. More broadly there are, for example, specification errors—where you build the right solution to the wrong problem—user interface, documentation and training errors—where the software does the right thing but the user does the wrong thing, such that the overall system still fails—and even project management errors, where the software is fine but took much longer or cost much more than planned. I’m not talking about those here. ↩

-

Clearly this division depends on the user: their expertise, their expectations, their context, their attentiveness, and so on. ↩

-

This is one reason you should inspect, summarize and visualize data, at input, intermediate and output stages. A well thought-out dataviz can put thousands of data points on the screen for a smart human to examine, highlighting otherwise undetectable errors. To a human, a house located on Null Island is obviously wrong. ↩

Add comment

Comments are moderated and will not appear immediately.